When AI Moves Faster Than Customer Trust: What CX Leaders Must Learn from the International AI Safety Report 2026

Ever watched a customer journey break because AI moved faster than your organisation?

A customer opens your app at 11:47 pm.

The chatbot answers instantly. Confident. Polite. Wrong.

It recommends a product already recalled.

It escalates too late.

And it logs the issue incorrectly.

By morning, your CX team is firefighting.

Legal wants explanations.

Tech says the model behaved “as expected.”

Customers just want accountability.

This is not an AI failure.

It is a governance and experience failure.

And that tension sits at the heart of the International AI Safety Report 2026, the most comprehensive global assessment of advanced AI risks released to date.

For CX and EX leaders, this report is not abstract policy reading.

It is a mirror.

What Is the International AI Safety Report 2026, and Why Should CX Leaders Care?

Short answer:

It is a global, science-led assessment of how advanced AI systems behave, fail, and create systemic risks—many of which show up first in customer experience.

The International AI Safety Report 2026 was led by Yoshua Bengio, with contributions from over 100 experts across 30+ countries, under a mandate shaped by the United Nations, the OECD, and national governments including India and the United Kingdom.

Although the report is framed around “AI safety,” its findings map directly to CX realities:

- Broken journeys

- Automation without empathy

- Loss of human override

- Fragmented accountability

- Trust erosion at scale

CX leaders sit on the front line of these risks.

Why AI Risk Is Now a CX Risk

Short answer:

Because customers experience AI through touchpoints, not technical safeguards.

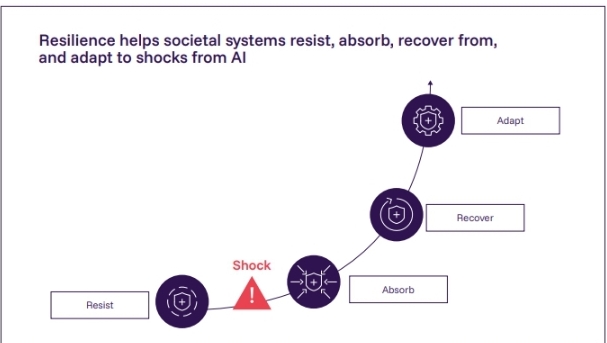

The report categorises AI risks into three groups:

- Malicious use

- Malfunctions

- Systemic risks

CX teams encounter all three, daily.

Let’s translate them into experience language.

Malicious Use → Brand Harm at Scale

AI-generated scams.

Synthetic voices.

Fake support agents.

Customers do not distinguish between “us” and “the ecosystem.”

If your brand touchpoint is abused, trust collapses instantly.

Malfunctions → Journey Breakage

Hallucinated answers.

Inconsistent escalation.

Confidently wrong responses.

From a CX lens, these are not “model errors.”

They are experience defects.

Systemic Risks → Long-Term CX Debt

Over-automation.

Eroded human judgment.

Employees deferring to AI over the customer in front of them.

This creates what CXQuest calls experience atrophy—a slow decay of empathy, discretion, and accountability.

What Is “General-Purpose AI,” and Why Does It Change CX Design?

Short answer:

General-purpose AI can handle many tasks, but that versatility makes its failures harder to predict and contain.

The report focuses on general-purpose AI systems—models that:

- Generate text, images, code, audio

- Reason across domains

- Act autonomously through agents

Examples include systems developed by OpenAI, Google DeepMind, Anthropic, Microsoft, and Meta.

From a CX standpoint, this means:

- One model touches many journeys

- Errors propagate across channels

- Ownership becomes blurred

Siloed teams cannot manage systemic AI behaviour.

The Hidden CX Insight: AI Capabilities Are “Jagged”

Short answer:

AI can excel at complex tasks yet fail at simple ones that any human would find obvious.

One of the report’s most important findings is capability jaggedness:

- AI can solve Olympiad-level maths problems

- AI can still fail basic multi-step workflows

- AI can sound confident while being wrong

For CX leaders, this explains:

- Why chatbots ace FAQs but fail edge cases

- Why agents over-trust AI recommendations

- Why escalation logic breaks under pressure

Confidence without reliability is toxic to experience.

Why Traditional CX Governance Fails in the AI Era

Short answer:

Because AI decisions cut across journeys, teams, and timeframes.

Most CX governance assumes:

- Linear journeys

- Clear ownership

- Predictable outcomes

AI breaks all three.

The report highlights an evaluation gap: how an AI system performs in testing does not reliably predict how it behaves in the real world.

CX leaders feel this gap as:

- Pilots that look perfect

- Launches that degrade silently

- Failures discovered by customers first

A New Framework: The CX Safety Stack

CXQuest proposes a practical translation of the report into experience design.

The CX Safety Stack

1. Experience Intent Layer

What should this AI never do to a customer?

2. Capability Boundaries

Where must AI stop and humans take over?

3. Journey-Level Monitoring

Not model metrics—journey outcomes.

4. Human Override Design

Fast, visible, blame-free escalation.

5. Post-Incident Learning Loops

Treat failures as experience signals, not PR crises.

This mirrors the report’s call for defence-in-depth, but anchors it in CX reality.
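The stack is a design framework rather than software. Still, layers 1, 2 and 4 translate naturally into a routing check that sits between the model and the customer. The Python below is a minimal sketch under stated assumptions. The topic and intent labels, the `Draft`/`Decision` types and `route_reply` are all hypothetical names. It also assumes an upstream classifier has already tagged each drafted reply. It deliberately ignores the model's own confidence, because confident-but-wrong output is exactly the jaggedness problem described above.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Layer 1 (hypothetical): topics this assistant must never handle on its own.
NEVER_EVENT_TOPICS = {"recalled_product", "legal_advice", "account_closure"}

# Layer 2 (hypothetical): the only intents the AI may resolve without a human.
AI_ALLOWED_INTENTS = {"order_status", "store_hours", "password_reset"}


@dataclass
class Draft:
    intent: str                            # customer intent, assumed pre-classified
    topics: set[str]                       # topics detected in the AI's drafted reply
    text: str                              # the drafted reply itself
    customer_requested_human: bool = False


@dataclass
class Decision:
    route: str                             # "send" to customer or "human" hand-off
    reasons: list[str] = field(default_factory=list)


def route_reply(draft: Draft) -> Decision:
    """Apply never events and capability boundaries; escalate on any hit."""
    reasons = []
    blocked = draft.topics & NEVER_EVENT_TOPICS
    if blocked:
        reasons.append(f"never event: {sorted(blocked)}")
    if draft.intent not in AI_ALLOWED_INTENTS:
        reasons.append(f"outside AI boundary: {draft.intent}")
    if draft.customer_requested_human:
        reasons.append("customer asked for a person")
    # Layer 4: any reason routes to a human, and the reasons travel with the hand-off.
    return Decision("human" if reasons else "send", reasons)
```

Any triggered rule sends the conversation to a person with the reasons attached. This makes the hand-off visible, and the receiving agent does not have to guess why it arrived.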

How AI Agents Change Employee Experience First

Short answer:

AI agents reshape how employees think, decide, and defer.

The report notes rapid growth in AI agents—systems that:

- Plan

- Act

- Use tools

- Operate with minimal oversight

In CX operations, this shows up as:

- Agent assist tools

- Auto-resolution engines

- Workflow bots

The risk is subtle: Employees stop thinking, start confirming.

This creates:

- Automation bias

- Reduced judgment

- Lower empathy over time

EX degradation precedes CX collapse.

Case Patterns: Where CX AI Goes Wrong

Across industries, CXQuest sees recurring patterns:

Pattern 1: Tool-First AI

AI added before journey clarity.

Pattern 2: KPI-Driven Automation

Cost reduction outweighs trust signals.

Pattern 3: Fragmented Ownership

IT owns models. CX owns complaints. No one owns outcomes.

Pattern 4: No Incident Playbook

Teams improvise when AI fails publicly.

The report’s findings suggest these patterns are systemic, not isolated mistakes.

What High-Maturity Organisations Do Differently

Short answer:

They treat AI as a socio-technical system, not a feature.

Advanced organisations align with the report’s findings by:

- Running pre-deployment journey risk reviews

- Defining “unsafe CX states”

- Testing AI under stress, ambiguity, and emotional load

- Maintaining human authority, not just presence

They also accept a hard truth: Some journeys should never be fully automated.

Open Models vs Closed Models: A CX Trade-Off

Short answer:

Open models increase flexibility but reduce your control, including the ability to withdraw them.

The report warns that open-weight models:

- Cannot be recalled

- Are harder to monitor

- Lower barriers to misuse

For CX leaders, the question is not ideology.

It is experience blast radius.

Ask:

- Can we shut this down instantly?

- Can we explain its behaviour?

- Can we trace harm to its source?

If not, it does not belong in a frontline journey.

The India Context: Scale Changes Everything

The report explicitly highlights emerging markets, including India, where:

- AI adoption is uneven

- Digital literacy varies

- Scale amplifies harm

For Indian CX leaders, this means:

- One failure reaches millions

- Language gaps worsen hallucinations

- Trust, once lost, is expensive to regain

Safety is not a luxury.

It is a scale prerequisite.

Common Pitfalls CX Leaders Must Avoid

- Mistaking fluency for accuracy

- Assuming pilots equal readiness

- Delegating AI decisions to a single team

- Ignoring employee cognitive load

- Treating safety as a compliance exercise

The report’s message is sobering:

Voluntary frameworks alone may not be enough.

Key Insights for CX and EX Leaders

- AI risk manifests first in customer journeys

- Reliability matters more than intelligence

- Human override must be designed, not assumed

- EX resilience protects CX trust

- Governance must be cross-functional and continuous

Frequently Asked Questions

How does AI safety affect customer experience directly?

AI failures appear as wrong answers, broken journeys, delayed escalations, and loss of trust.

Is AI safety only a regulatory concern?

No. Customers experience harm long before regulators intervene.

Can AI ever be fully trusted in CX?

Trust must be conditional, contextual, and monitored—not assumed.

Should CX teams own AI governance?

They must co-own outcomes, alongside IT, legal, and risk.

Does this slow down innovation?

No. It prevents expensive rework, reputational damage, and churn.

Actionable Takeaways for CX Leaders

- Map AI touchpoints across journeys, not systems

- Define “never events” for AI-driven experiences

- Design visible human override paths

- Train employees to question AI outputs

- Monitor journey outcomes, not model metrics

- Run failure simulations before public launch

- Create an AI incident response playbook

- Treat trust as a measurable CX asset
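Monitoring journey outcomes can start with simple outcome rates per journey rather than model scores. The sketch below assumes a hypothetical `Contact` record (customer, journey label, day, resolved, escalated) exported from contact-centre data. For each journey, it computes resolution, escalation and repeat-contact rates. It then flags journeys whose repeat-contact rate has drifted above a pilot baseline, which is one way to catch launches that degrade silently. The field names, the 7-day window and the 5-percentage-point tolerance are illustrative assumptions, not thresholds from the report.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Contact:
    customer_id: str
    journey: str      # hypothetical journey label, e.g. "refund" or "delivery"
    day: int          # day number on which the contact happened
    resolved: bool    # contact ended with the issue resolved
    escalated: bool   # contact was handed to a human


def journey_outcomes(contacts, repeat_window_days=7):
    """Per-journey resolution, escalation and repeat-contact rates."""
    by_journey = defaultdict(list)
    for c in contacts:
        by_journey[c.journey].append(c)
    report = {}
    for journey, items in by_journey.items():
        items.sort(key=lambda c: (c.customer_id, c.day))
        # A repeat is a later contact by the same customer within the window.
        repeats = sum(
            1
            for prev, cur in zip(items, items[1:])
            if cur.customer_id == prev.customer_id
            and cur.day - prev.day <= repeat_window_days
        )
        n = len(items)
        report[journey] = {
            "contacts": n,
            "resolution_rate": sum(c.resolved for c in items) / n,
            "escalation_rate": sum(c.escalated for c in items) / n,
            "repeat_contact_rate": repeats / n,
        }
    return report


def flag_degraded(report, baseline, tolerance=0.05):
    """Journeys whose repeat-contact rate exceeds their pilot baseline by > tolerance.

    Journeys with no baseline entry are skipped rather than guessed at.
    """
    return sorted(
        j for j, m in report.items()
        if j in baseline and m["repeat_contact_rate"] > baseline[j] + tolerance
    )
```

Repeat contact is used here as a proxy for "the journey did not actually work". Teams may prefer other outcome signals, such as complaints or churn, depending on what their data supports.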

Final Thought

The International AI Safety Report 2026 does not ask CX leaders to slow down.

It asks something harder.

To grow up faster than the technology they deploy.

AI may move at machine speed,

but trust moves at human speed.

CX leadership is where those two must finally meet.