When AI Becomes the Attack Multiplier: What CX & EX Leaders Must Do Now

You approve a new AI-powered productivity rollout on Monday.

By Thursday, a senior leader receives a flawless email.

Perfect tone. Familiar context. One click away from compromise.

That moment is no longer hypothetical. And the tool behind it is Google Gemini.

According to recent findings from Google, state-backed hackers have used generative AI, specifically Google Gemini, to accelerate cyberattacks across the full attack lifecycle.

This isn’t a security story alone.

It’s a CX and EX wake-up call.

Because when attackers move faster, smarter, and more human-like, customer trust and employee confidence fracture first.

What Is Happening, and Why Should CX Leaders Care?

Answer: Nation-state hackers are using generative AI to scale reconnaissance, phishing, and malware development. This raises the risk of trust erosion across customer and employee journeys.

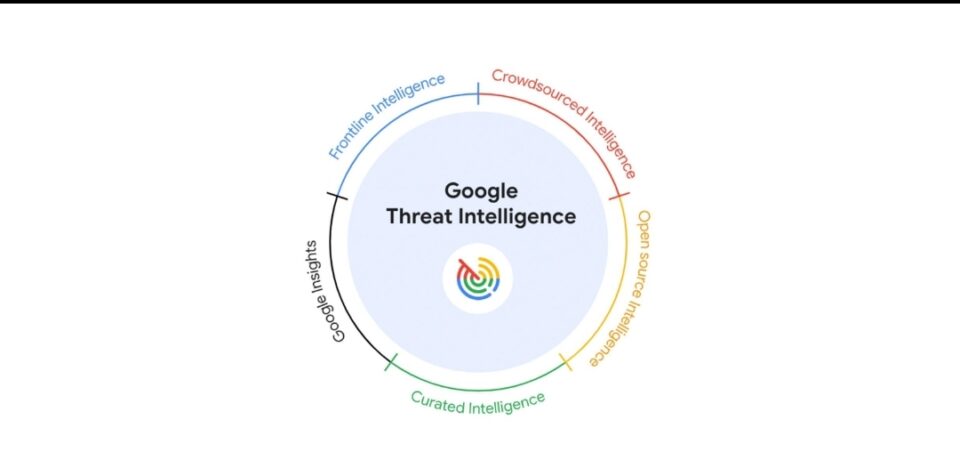

The Google Threat Intelligence Group revealed that threat actors linked to China, Iran, and North Korea used Google Gemini as a productivity accelerator.

Not as magic.

As leverage.

AI didn’t invent new attack types.

It compressed time, removed friction, and lowered expertise barriers.

For CX and EX leaders, that changes the risk equation.

Why This Is a CX and EX Problem—Not Just a CISO Issue

Answer: AI-enabled attacks exploit human trust, role context, and journey gaps—areas CX and EX teams own.

Here’s the uncomfortable truth.

Most modern attacks don’t start with malware.

They start with believability.

Attackers now use AI to:

- Research employee roles and salaries.

- Mirror executive communication styles.

- Craft emails aligned with live initiatives.

- Troubleshoot attack code faster than before.

That means:

- Employees become the attack surface.

- Journeys become the vulnerability.

- Trust moments become the target.

CX and EX leaders sit at the intersection of all three.

How Are State Actors Using AI Across the Attack Lifecycle?

Answer: AI is embedded across reconnaissance, social engineering, development, and execution—shrinking attack timelines.

Let’s break it down.

1. Reconnaissance at Scale

Attackers fed Google Gemini biographies, job listings, and company data.

The model mapped:

- High-value roles.

- Reporting lines.

- Technical access points.

- Likely communication patterns.

What used to take weeks now takes hours.

2. Hyper-Personalized Social Engineering

AI generated phishing messages aligned to:

- Industry jargon.

- Personal interests.

- Active business relationships.

This isn’t spam.

It’s contextual persuasion.

3. Accelerated Malware Development

Threat actors used Google Gemini to:

- Troubleshoot code.

- Analyze vulnerabilities.

- Generate payload components.

One malware framework even used Gemini’s API to return executable C# code in real time.

AI didn’t write the attack.

It removed the bottlenecks.

What Changed Strategically in the Threat Landscape?

Answer: AI has shifted from experimental novelty to operational infrastructure for attackers.

Google’s assessment is measured.

There’s no sudden superweapon.

But there is a step-change in efficiency.

Think of AI as:

- A junior analyst who never sleeps.

- A copywriter fluent in any tone.

- A coder who debugs instantly.

- A researcher with infinite patience.

That’s enough to tilt the balance.

Where CX and EX Teams Are Most Exposed

Answer: Fragmented journeys, inconsistent messaging, and siloed governance create exploitable seams.

Through a CXQuest lens, risk clusters around five zones.

1. Siloed Communications

Different tones across HR, IT, and leadership emails confuse employees.

Attackers exploit that inconsistency.

2. Journey Blind Spots

Onboarding, vendor engagement, and role transitions lack clear trust signals.

Those moments invite impersonation.

3. AI Without Guardrails

Teams adopt AI tools without shared usage standards.

Shadow AI creates invisible exposure.

4. Over-Indexed Productivity Metrics

Speed becomes success.

Verification becomes friction.

Attackers count on that tradeoff.

5. Training Focused on Tools, Not Behavior

Security training teaches rules.

Attackers manipulate emotion.

What About Model Extraction and IP Theft?

Answer: Adversaries are probing AI models to replicate reasoning, not just outputs.

Google also documented model extraction attacks.

These attempts used massive prompt volumes to:

- Coerce reasoning disclosures.

- Reconstruct model behavior.

- Transfer capabilities to other systems.

Documenting these attempts involved Google DeepMind.

While average users aren’t directly at risk, CX leaders should note this trend.

Why?

Because:

- AI differentiation erodes.

- Defenses commoditize.

- Attack capabilities diffuse faster.

The gap between defenders and attackers narrows.

What This Means for Customer Trust

Answer: AI-powered attacks increase the likelihood of breaches that feel personal, credible, and brand-aligned.

Customers no longer ask: “Did you get hacked?”

They ask: “Why did I believe it was you?”

That distinction matters.

Trust breaks harder when:

- Language matches your brand.

- Timing aligns with real events.

- Channels look official.

Recovery costs rise.

Reputation damage compounds.

A Practical Framework: The TRUST Layer Model

To respond, CXQuest recommends reframing security through journey trust layers.

T — Tone Consistency

Standardize how authority sounds across the organization.

If tone varies, attackers win.

R — Role Verification

Make role-based requests visible and confirmable.

Especially for finance, IT, and leadership actions.
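One way to make a role-based request confirmable is to bind a short code to the exact request, so the recipient can check it through a second channel. The sketch below is a minimal illustration under stated assumptions, not a production design: the function names `request_code` and `confirm_request`, the field layout, and the eight-character code length are all hypothetical, and it assumes a secret shared safely between the requesting system and the verifier.

```python
import hashlib
import hmac


def request_code(secret: bytes, sender_role: str, action: str, request_id: str) -> str:
    """Derive a short confirmation code bound to one specific request.

    Hypothetical scheme: the code is the first 8 hex characters of an
    HMAC-SHA256 over the role, the action, and the request ID.
    """
    message = "|".join([sender_role, action, request_id]).encode("utf-8")
    digest = hmac.new(secret, message, hashlib.sha256).hexdigest()
    return digest[:8]


def confirm_request(
    secret: bytes,
    sender_role: str,
    action: str,
    request_id: str,
    presented_code: str,
) -> bool:
    """Return True only if the presented code matches this exact request."""
    expected = request_code(secret, sender_role, action, request_id)
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(expected, presented_code)
```

In this sketch, a code issued for one action does not confirm a different action under the same request ID, so an impersonator who copies the code cannot reuse it for an altered request. A real deployment would also need secret management, code expiry, and a trusted channel for the check.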

U — User Education

Train for emotional manipulation, not just phishing indicators.

Explain why messages feel convincing.

S — Signal Reinforcement

Embed trust signals in journeys: badges, codes, phrasing conventions.

Make authenticity obvious.

T — Tool Governance

Create shared AI usage standards. One playbook. One owner.
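A single playbook is easier to enforce when it exists as data that can be checked against what teams actually use. The sketch below assumes a hypothetical playbook that maps each approved tool to the most sensitive data class it may handle. The tool names, data classes, and the `audit` function are illustrative assumptions, not a reference to any real product or standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolUse:
    team: str
    tool: str
    data_class: str  # one of the keys in SENSITIVITY


# Hypothetical ordering of data classes, least to most sensitive.
SENSITIVITY = {"public": 0, "internal": 1, "customer": 2}

# Hypothetical playbook: approved tool -> most sensitive data class allowed.
PLAYBOOK = {
    "approved-assistant": "internal",
    "approved-summarizer": "public",
}


def audit(inventory: list[ToolUse]) -> list[tuple[ToolUse, str]]:
    """Flag tool usage that falls outside the shared playbook."""
    findings = []
    for use in inventory:
        allowed = PLAYBOOK.get(use.tool)
        if allowed is None:
            # Shadow AI: the tool is in use but nobody approved it.
            findings.append((use, "tool not in playbook"))
        elif SENSITIVITY[use.data_class] > SENSITIVITY[allowed]:
            findings.append((use, "data class exceeds playbook limit"))
    return findings
```

Run against an inventory gathered from each CX and EX function, a check like this surfaces both unapproved tools and approved tools used on data they were never cleared for. The single owner named in the playbook would be the one to review the findings.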

Common Pitfalls CX Leaders Must Avoid

- Treating AI risk as an IT-only issue.

- Rolling out AI tools without journey mapping.

- Measuring speed without measuring confidence.

- Training once instead of continuously.

- Assuming employees “will know.”

Attackers assume the opposite.

Key Insights for CX and EX Leaders

- AI amplifies persuasion more than it amplifies code.

- Trust is now a security control.

- Journey clarity reduces attack surface.

- Consistency beats sophistication.

- Human-centered design is a defense strategy.

This is where CX maturity pays dividends.

FAQ: Long-Tail Questions CX Leaders Are Asking

How does AI-powered phishing differ from traditional attacks?

It adapts language, timing, and context dynamically, increasing credibility and response rates.

Should CX teams be involved in cybersecurity planning?

Yes. CX owns trust moments attackers exploit.

Does banning AI tools reduce risk?

No. It drives shadow usage and fragments governance.

Are customers directly targeted using AI-generated content?

Increasingly, yes, especially during billing, support, and account-change interactions.

How often should employees be trained on AI-related risks?

Quarterly, with scenario-based updates tied to real events.

Actionable Takeaways for CX Professionals

- Map your highest-trust journeys end to end.

- Identify where authority signals appear—or don’t.

- Standardize tone and verification cues across channels.

- Partner with security teams on behavioral training.

- Audit AI tool usage across CX and EX functions.

- Redesign onboarding and role-change journeys.

- Build trust reinforcement into every critical moment.

- Treat trust as measurable infrastructure, not a feeling.

AI didn’t break trust.

It exposed how fragile it already was.

For CX and EX leaders, the path forward is clear.

Design trust deliberately—or watch attackers do it for you.